Document Term Matrix

I like to think of the Document Term Matrix (DTM) as an implementation of the Bag of Words concept. A Document Term Matrix tracks the frequency of each term in each document. You start with the Bag of Words representation of the documents and then, for each document, you track the number of times each term appears. Term count is a common metric to use in a Document Term Matrix, but it is not the only metric. In a future post I will discuss some common functions used to calculate term frequencies, but for this post I will use term counts.

How to Calculate a Document Term Matrix

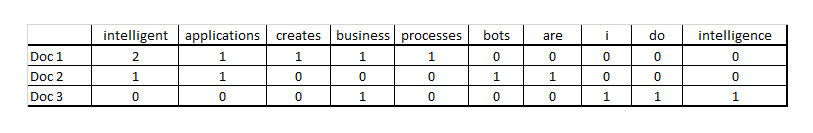

Let’s look at 3 simple documents.

- Intelligent applications creates intelligent business processes

- Bots are intelligent applications

- I do business intelligence

The Document Term Matrix for the three documents is:

We now have a simple numerical representation of the documents, which allows further processing or analytical investigation. The DTM contains each distinct term from the corpus (a collection of documents) and the count of each distinct term in each document. For example, the word intelligent appears in Doc 1 twice, in Doc 2 once, and not at all in Doc 3. A Document Term Matrix can become a very large, sparse matrix depending on the number of documents in the corpus and the number of distinct terms in those documents. Thanks to fast processors and lots of memory, a large, sparse matrix is usually no problem for our computers to handle.
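To make the calculation concrete, here is a minimal Python sketch that builds the term-count matrix for the three example documents. The `tokenize` and `build_dtm` helpers are names I made up for illustration, and the tokenizer rests on a simplifying assumption: it only lowercases the text and splits on whitespace, with no stemming, punctuation handling, or stop-word removal.

```python
from collections import Counter

docs = [
    "Intelligent applications creates intelligent business processes",
    "Bots are intelligent applications",
    "I do business intelligence",
]


def tokenize(text):
    # Assumption: lowercase and split on whitespace only.
    return text.lower().split()


def build_dtm(documents):
    """Return (vocab, matrix): one row per document, one column per term."""
    # Vocabulary in order of first appearance across the corpus.
    vocab = []
    seen = set()
    for doc in documents:
        for term in tokenize(doc):
            if term not in seen:
                seen.add(term)
                vocab.append(term)
    # Count terms per document; a Counter returns 0 for missing terms.
    counts = [Counter(tokenize(doc)) for doc in documents]
    matrix = [[c[term] for term in vocab] for c in counts]
    return vocab, matrix


vocab, dtm = build_dtm(docs)

# Print the matrix with terms as rows and documents as columns.
header = "".join(f"{'Doc ' + str(i + 1):>7}" for i in range(len(docs)))
print(f"{'term':<14}{header}")
for j, term in enumerate(vocab):
    cells = "".join(f"{row[j]:>7}" for row in dtm)
    print(f"{term:<14}{cells}")
```

With this tokenizer the corpus has ten distinct terms, and most cells are zero even for three tiny documents, which is the sparsity described above.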

Why Do We Need a Document Term Matrix

A Document Term Matrix creates a numerical representation of the documents in our corpus. A corpus is just a collection of documents. With this “larger” bag of words, we can do more interesting analytics. It is easy to determine individual word counts for each document or for all documents. We can now calculate aggregates and basic statistics such as the average term count and the mean, median, mode, variance and standard deviation of the length of the documents. We can also tell which terms are more frequent in the collection of documents and use that information to determine which terms are more likely to “represent” a document.
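As a rough sketch of these aggregates, the snippet below works on the term counts for the three example documents, typed in by hand. Row sums give document lengths, column sums give corpus-wide term counts, and Python's standard `statistics` module supplies the summary statistics. The variable names are my own, and I use the population variance and standard deviation, which is just one reasonable choice.

```python
import statistics

# Term counts for the three example documents (rows = documents).
vocab = ["intelligent", "applications", "creates", "business", "processes",
         "bots", "are", "i", "do", "intelligence"]
dtm = [
    [2, 1, 1, 1, 1, 0, 0, 0, 0, 0],  # Doc 1
    [1, 1, 0, 0, 0, 1, 1, 0, 0, 0],  # Doc 2
    [0, 0, 0, 1, 0, 0, 0, 1, 1, 1],  # Doc 3
]

# Row sums: length of each document in terms.
doc_lengths = [sum(row) for row in dtm]

# Column sums: how often each term occurs in the whole corpus.
corpus_counts = {term: sum(row[j] for row in dtm)
                 for j, term in enumerate(vocab)}

mean_length = statistics.mean(doc_lengths)
median_length = statistics.median(doc_lengths)
mode_length = statistics.mode(doc_lengths)
variance_length = statistics.pvariance(doc_lengths)  # population variance
stdev_length = statistics.pstdev(doc_lengths)        # population std. dev.

# Most frequent terms across the corpus (ties keep vocabulary order).
top_terms = sorted(corpus_counts.items(), key=lambda kv: kv[1], reverse=True)[:3]

print("document lengths:", doc_lengths)
print("mean / median / mode:", mean_length, median_length, mode_length)
print("variance / std dev:", variance_length, stdev_length)
print("top terms:", top_terms)
```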

The DTM representation is a fairly simple way to represent the documents as a numeric structure. Representing text as a numerical structure is a common starting point for text mining and analytics such as search and ranking, creating taxonomies, categorization, document similarity, and text-based machine learning. If you want to compare two documents for similarity, you will usually start with a numeric representation of the documents. If you want to do machine learning magic on your documents, you may start by creating a DTM representation of the documents and using data derived from that representation as features.
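To illustrate document similarity, here is a small sketch that treats each row of the DTM as a vector and compares rows with cosine similarity. Cosine similarity is my choice for the example; it is one common measure among several, and the `cosine_similarity` helper is hand-written here rather than taken from a library.

```python
import math

# Term-count rows for the three example documents, in the column order:
# intelligent, applications, creates, business, processes,
# bots, are, i, do, intelligence
doc1 = [2, 1, 1, 1, 1, 0, 0, 0, 0, 0]
doc2 = [1, 1, 0, 0, 0, 1, 1, 0, 0, 0]
doc3 = [0, 0, 0, 1, 0, 0, 0, 1, 1, 1]


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length count vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an empty document is treated as similar to nothing
    return dot / (norm_a * norm_b)


sim_12 = cosine_similarity(doc1, doc2)
sim_13 = cosine_similarity(doc1, doc3)
sim_23 = cosine_similarity(doc2, doc3)

print(f"Doc 1 vs Doc 2: {sim_12:.3f}")
print(f"Doc 1 vs Doc 3: {sim_13:.3f}")
print(f"Doc 2 vs Doc 3: {sim_23:.3f}")
```

Doc 1 and Doc 2 share two terms and score highest. Doc 2 and Doc 3 share none and score zero, even though "intelligent" and "intelligence" are clearly related. That is a limitation of raw term counts without stemming.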

Final Word on Document Term Matrix

Bag of Words and the Document Term Matrix are simple ways to numerically represent your documents for further mining and analytics. In most programming languages you can simply include a third-party library and easily create a DTM representation of your corpus. If you are working in the .NET world, chances are you might be creating your own DTM utility. The ability to represent your text as numbers is a key part of working with text data.

In the next post we will take a closer look at how term frequencies can be calculated.